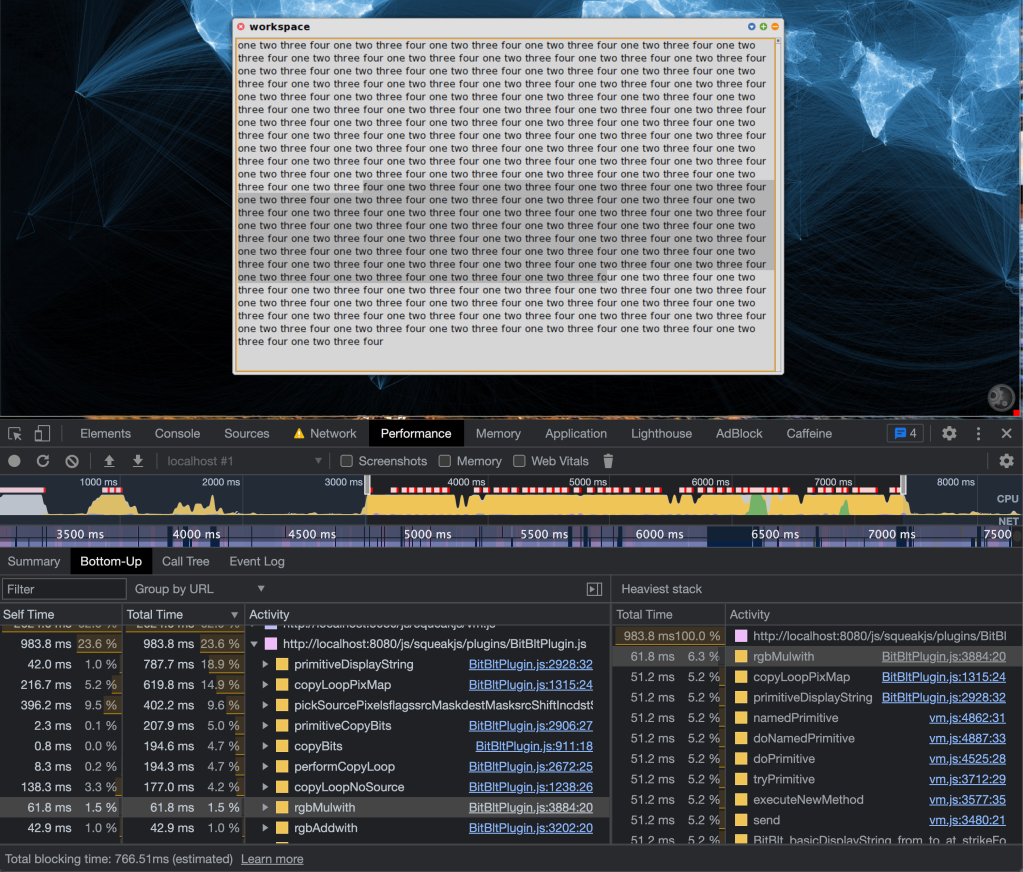

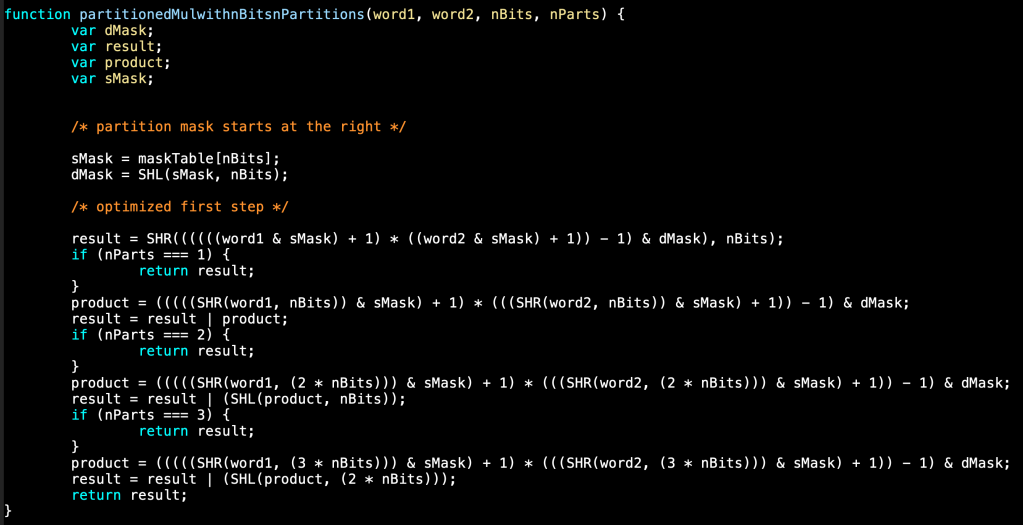

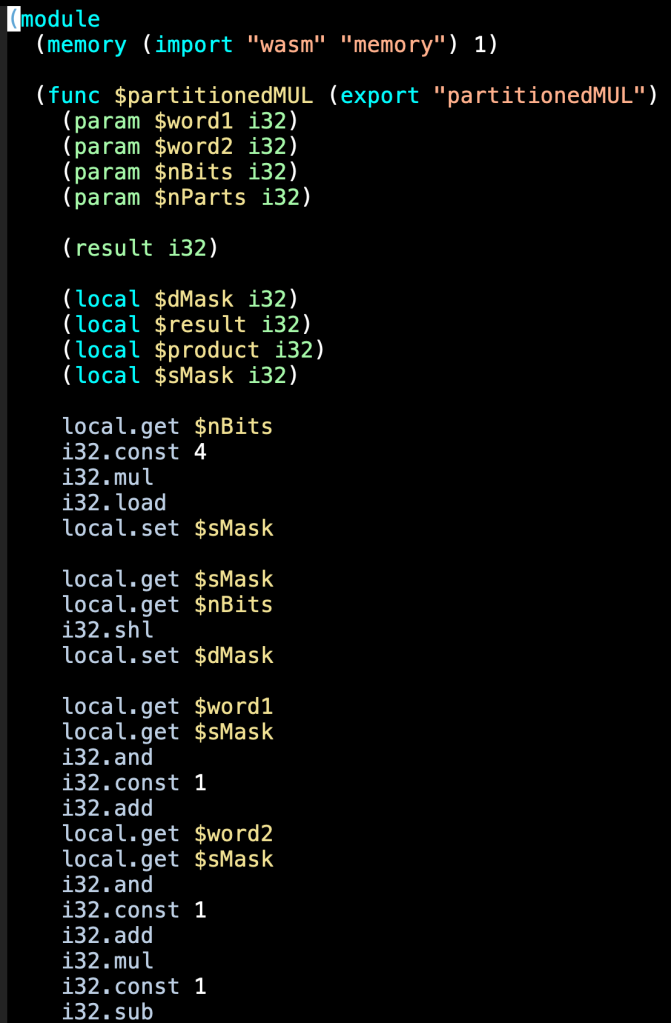

It’s straightforward to apply WebAssembly (WASM) to isolated JavaScript hotspots that have no side effects. This lets us speed up the parts of the SqueakJS primitives, like BitBLT, that are pure functions. The SqueakJS interpreter code, on the other hand, is rife with side effects. The act of interpretation modifies the Smalltalk object memory, which is a deep graph of connected structures.

In my first WASM-enabled SqueakJS virtual machine, I wrote a WASM version of the JS function that interprets a single Smalltalk instruction, interpretOneWASM. To perform the necessary interactions with the object memory, that WASM function called the original JS support functions. That approach yielded a working virtual machine, but with a high performance penalty. Since JS and WASM can use shared memory, I’m curious to see if we can eliminate the JS function calls in interpretOneWASM by using a synchronized linear representation of object memory, and if this would speed up the virtual machine.

I’ve modified SqueakJS so that it maintains a shared WASM memory buffer, into which it writes and updates serializations of the objects in object memory. Now, interpretOneWASM can read and write the object information it needs without having to make most of the previous JS calls. At the moment, some primitives (like garbage collection) are still JS calls. Since they were relatively long-running operations already, I don’t expect performance to suffer much.
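As a rough sketch of the shared-buffer idea (not the actual SqueakJS code), the snippet below creates a shared `WebAssembly.Memory` and writes one object into it as a header word followed by body words. The names `writeObject` and `readBodyWord`, the header value, and the one-page size are all assumptions made up for illustration.

```javascript
// Hypothetical sketch: names and layout are illustrative, not SqueakJS's API.
const WORD_BYTES = 4;

// One 64 KiB page that JS and a WASM module could both see.
// Shared memories must declare a maximum size.
const memory = new WebAssembly.Memory({ initial: 1, maximum: 1, shared: true });
const words = new Uint32Array(memory.buffer);

// Write one object as a header word followed by its body words.
// Returns the byte address just past the object.
function writeObject(byteAddress, headerWord, bodyWords) {
  let index = byteAddress / WORD_BYTES;
  words[index++] = headerWord >>> 0;
  for (const word of bodyWords) {
    words[index++] = word >>> 0;
  }
  return index * WORD_BYTES;
}

// Read one body word by address, much as WASM would with an i32.load.
function readBodyWord(byteAddress, fieldIndex) {
  return words[byteAddress / WORD_BYTES + 1 + fieldIndex];
}

const nextFreeAddress = writeObject(0, 0x12345678, [7, 8, 9]);
```

Passing the same `memory` as an import when instantiating a WASM module would give that module a view of exactly these bytes, with no copying between the two sides.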

The format of the shared linearized object memory is nearly identical to that of an object memory snapshot, with two differences. The first is that only one object header word is written in all cases; WASM doesn’t need the additional object header information supplied in a snapshot for re-creating objects, since it doesn’t do that. The second difference is that young objects, with negative addresses, are also represented, in a special memory segment above the tenured objects. This scheme allows WASM to access all objects by address.
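One way to picture the address scheme is a small mapping function. This is only a guess at the arithmetic: it assumes tenured addresses are byte offsets from the start of the buffer, and that young addresses are negative multiples of the word size, starting at -4, laid out in order in the segment just above the tenured objects. The names `makeAddressMapper` and `toBufferOffset` are hypothetical.

```javascript
// Hypothetical sketch of mapping object addresses to buffer offsets.
// Assumption: tenured objects occupy [0, tenuredBytes) and the young
// segment starts immediately after them.
const WORD_BYTES = 4;

function makeAddressMapper(tenuredBytes) {
  return function toBufferOffset(address) {
    if (address >= 0) {
      if (address >= tenuredBytes) {
        throw new RangeError("address is beyond the tenured segment");
      }
      return address; // tenured: the address is already the offset
    }
    // young: -4 maps to the first word of the young segment,
    // -8 to the second, and so on (assumed convention)
    return tenuredBytes + (-address - WORD_BYTES);
  };
}

const toBufferOffset = makeAddressMapper(1024);
const tenuredOffset = toBufferOffset(16);
const firstYoungOffset = toBufferOffset(-4);
const thirdYoungOffset = toBufferOffset(-12);
```

Whatever the exact convention, the point is that a single integer is enough for WASM to locate any object, tenured or young.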

When WASM modifies the object memory, it records the addresses of the modified objects in a special object. The JS side uses this information to update the canonical JS object memory representation. If at some point we implement all of the JS functions in WASM, we’ll be able to make the linear representation the only one. In the meantime, we have a framework for using both JS and WASM to their strengths, and transitioning from JS to WASM gradually.
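The synchronization step might look something like the sketch below. Everything here is a stand-in: a plain `Uint32Array` plays the shared buffer, a count-plus-addresses region plays the special object that records modifications, and `storeFieldFromWasm` imitates in JS what the WASM side would do. None of these names come from SqueakJS.

```javascript
// Hypothetical sketch of dirty-object tracking and JS-side sync.
const WORD_BYTES = 4;
const words = new Uint32Array(64); // stand-in for the shared buffer
const DIRTY_COUNT = 48;            // word index: number of recorded addresses
const DIRTY_FIRST = 49;            // word index: first recorded address

// Canonical JS-side objects, keyed by address (assumed shape).
const jsObjects = new Map([[8, { size: 2, fields: [1, 2] }]]);

// Linear copy of the same object: header at word 2, fields at words 3 and 4.
words[2] = 0;
words[3] = 1;
words[4] = 2;

// What the WASM side would do: store a field, then record the address.
function storeFieldFromWasm(address, fieldIndex, value) {
  words[address / WORD_BYTES + 1 + fieldIndex] = value;
  words[DIRTY_FIRST + words[DIRTY_COUNT]] = address;
  words[DIRTY_COUNT] += 1;
}

// What the JS side would do: refresh each recorded object, then clear the list.
function syncDirtyObjects() {
  const count = words[DIRTY_COUNT];
  for (let i = 0; i < count; i++) {
    const address = words[DIRTY_FIRST + i];
    const object = jsObjects.get(address);
    const first = address / WORD_BYTES + 1;
    object.fields = Array.from(words.subarray(first, first + object.size));
  }
  words[DIRTY_COUNT] = 0;
  return count;
}

storeFieldFromWasm(8, 1, 42);
const syncedCount = syncDirtyObjects();
```

Only the objects that were actually touched get re-read, so the cost of keeping the two representations in step scales with the amount of mutation rather than the size of object memory.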

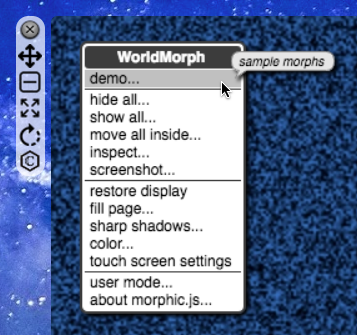

The user can choose dynamically whether the JS or the WASM version of the interpretOne function is in use. At the moment, we let JS read the object memory snapshot and get the system started, then switch to WASM after the initial context is loaded and running. JS also writes the entire linearized object memory into the shared WASM buffer. The user can switch interpretOne from JS to WASM by evaluating a Smalltalk expression that invokes a JS function of the interpreter (“JS display vm useWASM”).
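The runtime switch can be pictured as swapping a function reference. The class below is a minimal sketch rather than the real interpreter: `SketchVM`, `run`, and the `executed` log are invented for illustration, and the WASM export is replaced by a stub function. Only the names `interpretOneWASM` and `useWASM` echo the ones mentioned above.

```javascript
// Hypothetical sketch of switching interpretOne between JS and WASM.
class SketchVM {
  constructor(wasmInterpretOne) {
    this.wasmInterpretOne = wasmInterpretOne; // would be a WASM export
    this.executed = [];                       // records which version ran
    this.interpretOne = this.interpretOneJS;  // start in JS
  }

  interpretOneJS() {
    this.executed.push("js");
  }

  interpretOneWASM() {
    this.wasmInterpretOne();
    this.executed.push("wasm");
  }

  // Swap the active implementation; callable at any time.
  useWASM(enabled = true) {
    this.interpretOne = enabled ? this.interpretOneWASM : this.interpretOneJS;
  }

  run(steps) {
    for (let i = 0; i < steps; i++) {
      this.interpretOne();
    }
  }
}

const vm = new SketchVM(() => {}); // stub in place of a real WASM function
vm.run(2);     // JS gets the system started
vm.useWASM();  // then the switch happens
vm.run(1);
```

Because the interpreter loop always calls through `this.interpretOne`, the switch takes effect on the very next instruction, and switching back is just as cheap.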

It’ll be interesting to see if this scheme yields an overall increase in system speed. Hopefully, with this gradual transition, we’ll be able to tell if a full conversion of SqueakJS from JS to WASM is worthwhile, before investing a lot of effort into rewriting the JS functions.