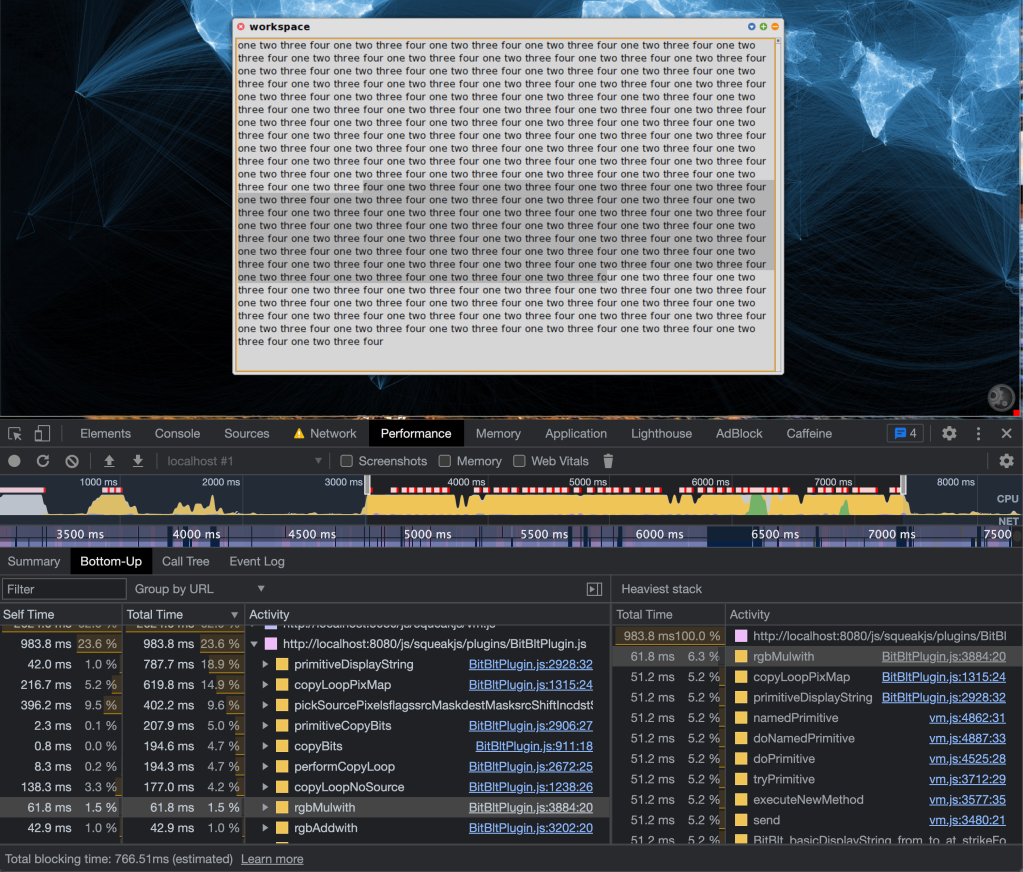

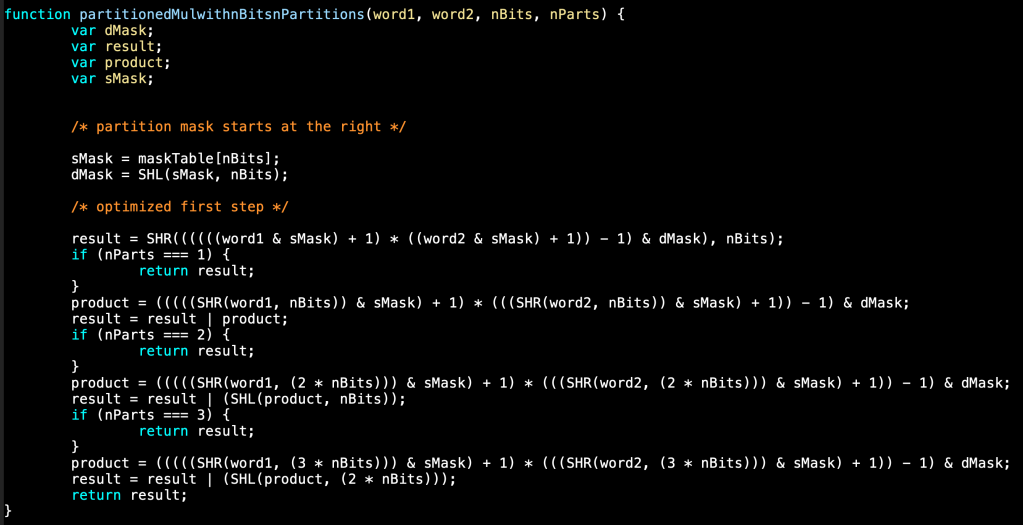

In Catalyst, a WebAssembly implementation of the OpenSmalltalk virtual machine, there are three linguistic levels in play: Smalltalk, JavaScript (JS), and WebAssembly (WASM). Smalltalk is our primary language, JS is the coordinating language of the hosting environment (a web browser), and WASM is a high-performance runtime instruction set to which we can compile any other language. In a previous article, I wrote about automatic translation of JS to WASM, as a temporary way of translating the SqueakJS virtual machine to WASM. That approach benefits from a proven JS starting point for the relatively large codebase of the virtual machine. When translating individual Smalltalk compiled methods for “just-in-time” optimization, however, it makes more sense to translate from Smalltalk to WASM directly.

compiled method transcription

We already have infrastructure for transcribing Smalltalk compiled methods, via class InstructionStream. We use it to print human-readable descriptions of method instructions, and to simulate their execution in the Smalltalk debugger. We can also use it to translate a method to human-readable WebAssembly Text (WAT) source code, suitable for translation to binary WASM code which the web browser can execute. Since the Smalltalk and WASM instruction sets are both stack-oriented, the task is straightforward.

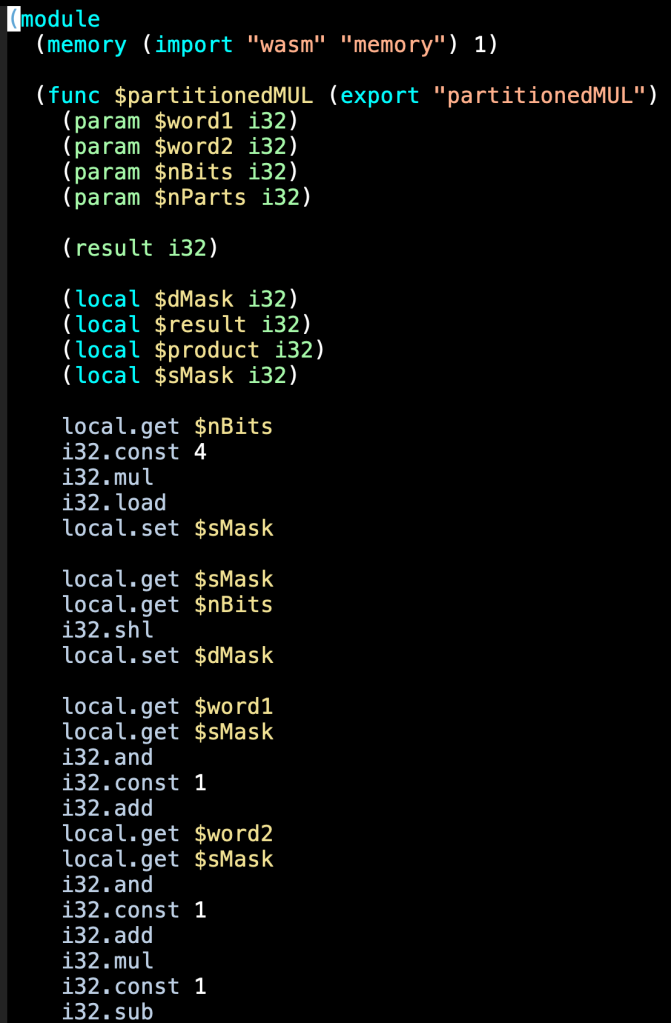

I’ve created a subclass of InstructionStream, called WATCompiledMethodTranslator, which uses the classic scanner pattern to drive translation from Smalltalk instructions to WASM instructions. With accompanying WASM type information for Smalltalk virtual machine structures, we can make WASM modules that execute the instructions for individual Smalltalk methods.

the “hello world” of Smalltalk: 3 + 4

As an example, let’s take a look at translating the traditional first Smalltalk expression, 3 + 4. We’ll create a Smalltalk method in class HelloWASM from this source:

    HelloWASM>>add
        "Add two numbers."

        ^3 + 4

This gives us a compiled method with the following Smalltalk instructions. On each line below, we list the program counter value, the instruction, and a description of the instruction.

    0: 0x20: push the literal constant at index 0 (3) onto the method's stack
    1: 0x21: push the literal constant at index 1 (4) onto the method's stack
    2: 0xB0: send the arithmetic message at index 0 (+)
    3: 0x7C: return the top of the method's stack
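For comparison, the same sum can be written directly against WASM's own operand stack. The JavaScript sketch below is not Catalyst output: it hand-assembles a minimal binary module whose exported function (named `add` here purely as an assumption) pushes two raw i32 constants and adds them, with no Smalltalk objects or Smalltalk stack involved. It is only meant to show how closely the two stack-oriented instruction sequences line up.

```javascript
// A hand-assembled WASM module, equivalent to this WAT:
//   (module (func (export "add") (result i32)
//     i32.const 3  i32.const 4  i32.add))
const bytes = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d,                    // magic: "\0asm"
  0x01, 0x00, 0x00, 0x00,                    // binary format version 1
  0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7f,  // type section: () -> i32
  0x03, 0x02, 0x01, 0x00,                    // function section: one function of type 0
  0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,  // export "add" = function 0
  0x0a, 0x09, 0x01, 0x07, 0x00,              // code section: one body, no locals
  0x41, 0x03,  // i32.const 3  (compare: push the literal 3)
  0x41, 0x04,  // i32.const 4  (compare: push the literal 4)
  0x6a,        // i32.add      (compare: send +)
  0x0b,        // end          (the value left on the stack is the result)
]);

// Compile and instantiate synchronously, then call the exported function.
const instance = new WebAssembly.Instance(new WebAssembly.Module(bytes));
const sum = instance.exports.add();
console.log(sum);
```

The translation described in this article differs in an important way: it keeps Smalltalk values on a Smalltalk stack held in a WASM array, rather than on the WASM operand stack.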

A WATCompiledMethodTranslator uses an instance of InstructionStream as a scanner of the method, interpreting each Smalltalk instruction in turn. When interpreting an instruction, the scanner sends a corresponding message to the translator, which in turn writes a transcription of that instruction as WASM instructions, onto a stream of WAT source.
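The scanner-and-client arrangement can be sketched in JavaScript as follows. This is an illustration under stated assumptions, not the Smalltalk implementation: `scan`, `CommentingClient`, and `atPC` are hypothetical names, the method is a plain object standing in for a compiled method, and only the four bytecodes from the listing above are decoded. The client here merely records a comment per instruction; a translator would emit WASM instructions instead.

```javascript
// Hypothetical stand-in for a compiled method: its bytecodes and literal frame.
const method = { bytes: [0x20, 0x21, 0xb0, 0x7c], literals: [3, 4] };

// Decode each bytecode and send the corresponding message to the client.
// Every instruction in this example is one byte long, so the array index
// doubles as the program counter.
function scan(method, client) {
  method.bytes.forEach((byte, pc) => {
    client.atPC(pc);
    if (byte >= 0x20 && byte <= 0x3f) {
      // Push a literal constant; the low bits select the literal.
      client.pushConstant(method.literals[byte - 0x20]);
    } else if (byte === 0xb0) {
      // Arithmetic message at index 0 (+), which takes one argument.
      client.send('+', 1);
    } else if (byte === 0x7c) {
      client.methodReturnTop();
    } else {
      throw new Error('unhandled bytecode 0x' + byte.toString(16));
    }
  });
}

// A client that records one comment line per message it receives.
class CommentingClient {
  constructor() { this.lines = []; }
  atPC(pc) { this.lines.push(`;; pc ${pc}`); }
  pushConstant(value) { this.lines.push(`;; push constant ${value}`); }
  send(selector, argumentCount) { this.lines.push(`;; send ${selector}`); }
  methodReturnTop() { this.lines.push(';; return top'); }
}

const client = new CommentingClient();
scan(method, client);
console.log(client.lines.join('\n'));
```

Keeping decoding in the scanner and output in the client means the same scanner can drive a printer, a debugger simulation, or a translator.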

The first instruction in the method is “push the literal constant at index 0”. The scanner finds the indicated literal in the literal frame of the method (i.e., 3), and sends pushConstant: 3 to the translator. Here are the methods that the translator runs in response:

    WATCompiledMethodTranslator>>pushConstant: value
        "Push value, a constant, onto the method's stack."

        self
            comment: 'push constant ', value printString;
            pushFrom: [value printWATFor: self]

    WATCompiledMethodTranslator>>pushFrom: closure
        "Evaluate closure, which emits WASM instructions that push a value onto the WASM stack. Emit further WASM instructions that push that value onto the Smalltalk stack."

        self
            setElementAtIndexFrom: [
                self
                    incrementField: #sp
                    ofStructType: #vm
                    named: #vm;
                    getField: #sp
                    ofStructType: #vm
                    named: #vm]
            ofArrayType: #pointers
            named: #stack
            from: closure

    WATCompiledMethodTranslator>>setElementAtIndexFrom: elementIndexClosure ofArrayType: arrayTypeName named: arrayName from: elementValueClosure
        "Evaluate elementIndexClosure to emit WASM instructions that leave an array index on the WASM stack. Evaluate elementValueClosure to emit WASM instructions that leave an array element value on the WASM stack. Emit further WASM instructions, setting the element with that index in an array of the given type and variable name to the value."

        self get: arrayName.
        {elementIndexClosure. elementValueClosure} do: [:each | each value].
        self
            indent;
            nextPutAll: 'array.set $';
            nextPutAll: arrayTypeName

In the last method above, we finally see a WASM instruction, array.set. The translator implements stream protocol for writing WAT text to a stream. The comment:, get:, and getField:ofStructType:named: methods are similar, emitting “;;” comments and the global.get and struct.get WASM instructions, respectively. The array and struct instructions are part of the WASM garbage collection extension, which adds struct and array types to WASM.
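To make the nesting of closures easier to follow, here is a JavaScript sketch of the same emitting logic. It is a hypothetical counterpart, not the translator itself: the class `WatEmitter` and its method names are assumptions, `get` is assumed to emit `global.get`, the increment is assumed to expand to an add-one-and-store sequence, and constants are assumed to print as `i32.const`, all of which match the WAT that the article shows for the first instruction.

```javascript
// Hypothetical JavaScript counterpart of the translator's emitting methods.
class WatEmitter {
  constructor() { this.lines = []; }
  emit(line) { this.lines.push(line); }
  comment(text) { this.emit(`;; ${text}`); }
  get(globalName) { this.emit(`global.get $${globalName}`); }

  // Leave the value of a struct field on the WASM stack.
  getField(field, structType, globalName) {
    this.get(globalName);
    this.emit(`struct.get $${structType} $${field}`);
  }

  // Add one to a struct field, storing the result back into the struct.
  incrementField(field, structType, globalName) {
    this.get(globalName);                          // struct reference for struct.set
    this.getField(field, structType, globalName);  // current field value
    this.emit('i32.const 1');
    this.emit('i32.add');
    this.emit(`struct.set $${structType} $${field}`);
  }

  // array.set expects the array, then the index, then the value.
  setElement(indexClosure, arrayType, arrayName, valueClosure) {
    this.get(arrayName);
    indexClosure();
    valueClosure();
    this.emit(`array.set $${arrayType}`);
  }

  // Push onto the Smalltalk stack: bump sp, then store at the new sp.
  pushFrom(valueClosure) {
    this.setElement(() => {
      this.incrementField('sp', 'vm', 'vm');
      this.getField('sp', 'vm', 'vm');
    }, 'pointers', 'stack', valueClosure);
  }

  pushConstant(value) {
    this.comment(`push constant ${value}`);
    this.pushFrom(() => this.emit(`i32.const ${value}`));
  }
}

const emitter = new WatEmitter();
emitter.pushConstant(3);
console.log(emitter.lines.join('\n'));
```

Passing closures rather than precomputed text lets each helper decide where in the output the index and value instructions belong, which matters because array.set consumes its operands in a fixed order.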

WASM types for virtual machine structures

To actually use WASM instructions that make use of types, we need to define the types in our method’s WASM module. In pushFrom: above, we use a struct variable of type vm named vm, and an array variable of type pointers named stack. The vm variable holds global virtual machine state (for example, the currently executing method’s stack pointer), similar to the SqueakJS.vm variable in SqueakJS. The stack variable holds an array of Smalltalk object pointers, constituting the current method’s stack. In general, the WASM code for a Smalltalk method will also need fast variable access to the active Smalltalk context, the active context’s stack, the current method’s literals, and the current method’s temporary variables.

Our WASM module for HelloWASM>>add might begin like this:

    (module
      (type $bytes (array (mut i8)))
      (type $words (array (mut i32)))
      (type $pointers (array (ref $object)))
      (type $object (struct
        (field $metabits (mut i32))
        (field $class (ref $object))
        (field $format (mut i32))
        (field $hash (mut i32))
        (field $pointers (ref $pointers))
        (field $words (ref $words))
        (field $bytes (ref $bytes))
        (field $float (mut f32))
        (field $integer (mut i32))
        (field $address (mut i32))
        (field $nextObject (ref $object))))
      ;; global virtual machine state
      (type $vm (struct
        (field $sp (mut i32))
        (field $pc (mut i32))))
      (global $vm (mut (ref null $vm)) (ref.null $vm))
      ;; the current method's stack
      (global $stack (mut (ref null $pointers)) (ref.null $pointers))
      (func $HelloWASM_add
        ;; pc 0
        ;; push constant 3
        global.get $stack       ;; the array for array.set
        global.get $vm          ;; the struct for struct.set
        global.get $vm
        struct.get $vm $sp
        i32.const 1
        i32.add
        struct.set $vm $sp      ;; increment the stack pointer
        global.get $vm
        struct.get $vm $sp      ;; the index for array.set
        i32.const 3             ;; the value for array.set
        array.set $pointers
        ;; pc 1
        ...
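To check that the instruction order for pc 0 does what its comments say, the sequence can be stepped through with a toy evaluator. The JavaScript sketch below is a simplified model and not a WASM engine: the `globals` object stands in for the vm struct and the stack array, the initial stack pointer of -1 is an assumption, and only the six instructions used for this push are handled.

```javascript
// Hypothetical JavaScript models of the vm struct and the stack array.
const globals = {
  vm: { sp: -1, pc: 0 },            // assumed initial state: empty stack
  stack: new Array(8).fill(null),
};

// The instructions emitted for "push constant 3", as [opcode, operand].
// Type names are omitted because this model does no type checking.
const program = [
  ['global.get', 'stack'],
  ['global.get', 'vm'],
  ['global.get', 'vm'],
  ['struct.get', 'sp'],
  ['i32.const', 1],
  ['i32.add'],
  ['struct.set', 'sp'],
  ['global.get', 'vm'],
  ['struct.get', 'sp'],
  ['i32.const', 3],
  ['array.set'],
];

// Evaluate the program against a WASM-style operand stack.
function run(program, globals) {
  const operands = [];
  for (const [op, arg] of program) {
    switch (op) {
      case 'global.get': operands.push(globals[arg]); break;
      case 'i32.const': operands.push(arg); break;
      case 'i32.add': {
        const b = operands.pop();
        const a = operands.pop();
        operands.push((a + b) | 0);
        break;
      }
      case 'struct.get': operands.push(operands.pop()[arg]); break;
      case 'struct.set': {
        const value = operands.pop();
        operands.pop()[arg] = value;
        break;
      }
      case 'array.set': {
        const value = operands.pop();
        const index = operands.pop();
        operands.pop()[index] = value;
        break;
      }
      default: throw new Error('unhandled instruction ' + op);
    }
  }
  return operands;
}

const leftover = run(program, globals);
console.log(globals.vm.sp, globals.stack[globals.vm.sp], leftover.length);
```

In this model the sequence leaves the operand stack empty, with the stack pointer advanced by one and the constant stored in the slot it now indexes.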

As is typical with assembly-level code, the setup is verbose, but it enables fast paths for the execution machinery. We’re also effectively taking on the task of writing the firmware for our idealized Smalltalk processor, by setting up interfaces to contexts and methods, and by implementing the logic for each Smalltalk instruction. In a future article, I’ll discuss the mechanisms by which we actually run the WASM code for a Smalltalk method. I’ll also compare the performance of dynamic WASM translations of Smalltalk methods versus the dynamic JS translations that SqueakJS makes. I don’t expect the WASM translations to be much (or any) faster at the moment, but I do expect them to get faster over time, as the WASM engines in web browsers improve (just as JS engines have).