AI-assisted just-in-time compilation in Catalyst

There’s a long tradition of just-in-time compilation of code in livecoding systems, from McCarthy’s LISP systems in the 1960s, to the dynamic method translation of the Deutsch-Schiffman Smalltalk virtual machine, to the “hotspot” compilers of Self, Strongtalk and Java, to current implementations for JavaScript and WebAssembly in Chrome’s V8 and Firefox’s SpiderMonkey. Rather than interpret sequences of virtual machine instructions, these systems translate instruction sequences into equivalent (and ideally more efficient) actions performed by the instructions of a physical processor, and run those instead.

We’d like to employ this technique with the WASM GC Catalyst Smalltalk virtual machine as well. Translating the instructions of a Smalltalk compiled method into WASM GC instructions is straightforward, and there are many optimizations of those instructions that we can specify ahead of time. But with the current inference abilities of large language models (LLMs), we can leave even that logic until runtime.

dynamic method translation by LLM

Catalyst runs as a WASM module orchestrated by SqueakJS in a web browser. The browser has JavaScript APIs for WASM interoperation, and there are JS APIs for interacting with LLMs, so we can incorporate LLM inference in our translation of methods to WASM functions. We just need an expressive system for composing appropriate prompts. Using the same Epigram compilation framework that enables the decompilation of the Catalyst virtual machine itself into WASM GC, we can express method instructions in a prompt by delegating that task to the reified instructions themselves.
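To make the delegation idea concrete, here is a minimal sketch in Python rather than Smalltalk. Everything in it is hypothetical: the `PushConstant` and `SendArithmetic` classes, the `describe` method, and `compose_prompt` are illustrative names, not Epigram’s or Catalyst’s actual API. The point is only that each reified instruction knows how to describe itself, so the prompt composer never needs a big case statement over instruction types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PushConstant:
    """Hypothetical reified instruction: push a literal onto the stack."""
    value: int

    def describe(self) -> str:
        return f"push the constant {self.value}"


@dataclass(frozen=True)
class SendArithmetic:
    """Hypothetical reified instruction: send a binary arithmetic selector."""
    selector: str

    def describe(self) -> str:
        return f"pop two values, apply {self.selector}, and push the result"


def compose_prompt(method_name, instructions):
    """Build a prompt by asking each instruction to describe itself."""
    lines = [
        f"Translate the Smalltalk method {method_name} into a WASM function.",
        "The receiver starts on the stack. The instructions are:",
    ]
    for index, instruction in enumerate(instructions, start=1):
        lines.append(f"{index}. {instruction.describe()}")
    lines.append("Apply any optimization that preserves the result.")
    return "\n".join(lines)


prompt = compose_prompt(
    "SmallInteger>>benchmark",
    [PushConstant(1), SendArithmetic("+"), PushConstant(2), SendArithmetic("*")],
)
print(prompt)
```

The resulting string would then be handed to whichever LLM API is in use; that call is deliberately left out here, since its shape depends on the provider.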

For an example, let’s take the first method developed for Catalyst to execute, SmallInteger>>benchmark, a simple but sufficiently expensive benchmark. It repeats this pattern five times: add one to the receiver, multiply the result by two, add two to the result, multiply the result by three, add three to the result, and multiply the result by two. This is trivial to express as a sequence of stack operations, in both Smalltalk instructions and WASM instructions.
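As a rough illustration of that pattern, here is a sketch in Python of both forms: the arithmetic written directly, and the same work as a flat list of stack operations run by a tiny interpreter. The tuple encoding and the `run_stack` helper are assumptions made for this example and don’t correspond to real Smalltalk bytecodes or WASM opcodes. Python integers are unbounded, so the sketch also ignores SmallInteger range limits.

```python
def benchmark_round(n):
    """One pass of the pattern described above."""
    n = n + 1
    n = n * 2
    n = n + 2
    n = n * 3
    n = n + 3
    n = n * 2
    return n


def benchmark(n):
    """The whole benchmark: the pattern repeated five times."""
    for _ in range(5):
        n = benchmark_round(n)
    return n


# The same pattern as stack operations (illustrative encoding only).
ROUND = [
    ("push", 1), ("add",),
    ("push", 2), ("mul",),
    ("push", 2), ("add",),
    ("push", 3), ("mul",),
    ("push", 3), ("add",),
    ("push", 2), ("mul",),
]
PROGRAM = ROUND * 5  # fully inlined, no loop


def run_stack(program, receiver):
    """Run the stack program with the receiver as the initial stack entry."""
    stack = [receiver]
    for instruction in program:
        op = instruction[0]
        if op == "push":
            stack.append(instruction[1])
        elif op == "add":
            right = stack.pop()
            left = stack.pop()
            stack.append(left + right)
        elif op == "mul":
            right = stack.pop()
            left = stack.pop()
            stack.append(left * right)
        else:
            raise ValueError(f"unknown operation: {op}")
    return stack.pop()


print(benchmark(1), run_stack(PROGRAM, 1))
```

Both forms give the same answer for any receiver, which is all a direct instruction-by-instruction translation needs to preserve.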

Our pre-written virtual machine code can do the simple translation between those instruction sets without using an LLM at all. With a little reasoning, an LLM can recognize from those instructions that something is being performed five times, and write a loop instead of inlining all the operations. With a little more reasoning, it can analyze a single cycle and discover the algebraic relationship between the receiver n and the output: each cycle maps n to 12n + 30, so five cycles give 248,832n + 678,630. That enables it to write a much faster WASM function of five instructions instead of 62.
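The algebra can be checked mechanically. The sketch below, in Python, treats every step as an affine map n → a·n + b and composes the steps; the `(op, constant)` encoding and the function names are made up for this example. It is a way to verify the closed form, not a description of how an LLM arrives at it.

```python
# One cycle of the benchmark as (operation, constant) pairs (illustrative encoding).
CYCLE = [("add", 1), ("mul", 2), ("add", 2), ("mul", 3), ("add", 3), ("mul", 2)]


def compose_affine(steps):
    """Fold a sequence of add/mul steps into (a, b) such that n -> a*n + b."""
    a, b = 1, 0  # start from the identity map
    for op, k in steps:
        if op == "add":
            b += k
        elif op == "mul":
            a *= k
            b *= k
        else:
            raise ValueError(f"unknown operation: {op}")
    return a, b


def run_steps(steps, n):
    """Reference implementation: apply every step one at a time."""
    for op, k in steps:
        n = n + k if op == "add" else n * k
    return n


one_cycle = compose_affine(CYCLE)
five_cycles = compose_affine(CYCLE * 5)
print(one_cycle)    # (12, 30)
print(five_cycles)  # (248832, 678630)


def benchmark_closed_form(n):
    """The collapsed version: one multiply and one add."""
    a, b = five_cycles
    return a * n + b
```

The closed form agrees with the step-by-step version for every receiver, as long as the values stay within whatever integer range the target function uses; overflow behaviour is outside the scope of this sketch.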

the future

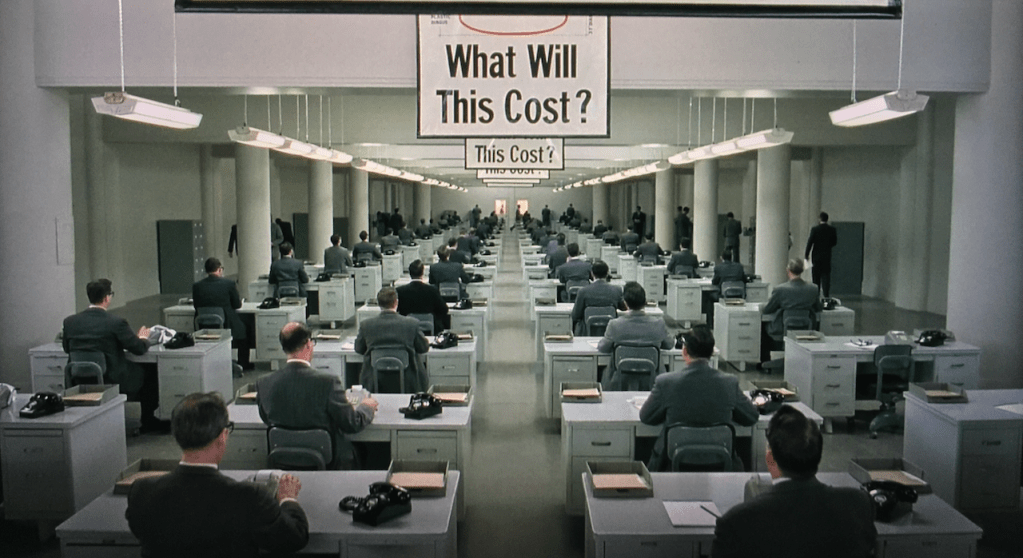

This is a contrived example, of course, but it clearly shows the potential of LLM-assisted method translation, at least for mathematical operations. I’ve confirmed that it works in Catalyst, and used the results to populate a polymorphic inline cache of code to run instead of interpretation. What remains to be seen, drawing inspiration from the Self implementation experience, is how much time and money is appropriate to spend on the LLM. That can only become clear through real use cases, adapting to changing system conditions over time.
