a Tethered server-side display

Tether is Caffeine’s remote messaging protocol. It enables messaging between multiple Caffeine systems running in web browsers, other JavaScript runtimes, or native apps, using TCP sockets, WebSockets, or WebRTC data channels. With the Deno JavaScript runtime, Caffeine can run server-side in a Web Worker thread, with the main thread providing a websocket for Caffeine to use, and acting as a bridge for Tether traffic between the worker and connected clients. Every system speaking the protocol does so with a local instance of class Tether.
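To make the bridge role concrete, here is a minimal sketch of a relay between a worker and its connected clients. It is an illustration under assumptions, not Caffeine's code: the `Envelope` shape, the `RelayBridge` class, and all of its method names are hypothetical, and plain callbacks stand in for the worker's postMessage() and for each client's socket.

```typescript
// Hypothetical envelope; Tether's real wire format is not shown in this post.
interface Envelope {
  clientId: number;
  payload: string;
}

type SendToClient = (payload: string) => void;
type PostToWorker = (envelope: Envelope) => void;

// Hypothetical bridge: callbacks stand in for postMessage() and for sockets.
class RelayBridge {
  private clients: { [id: number]: SendToClient } = {};
  private nextId = 1;
  private postToWorker: PostToWorker;

  constructor(postToWorker: PostToWorker) {
    this.postToWorker = postToWorker;
  }

  // Register a connected client and return the id used to address it.
  connect(send: SendToClient): number {
    const id = this.nextId++;
    this.clients[id] = send;
    return id;
  }

  disconnect(id: number): void {
    delete this.clients[id];
  }

  // Client to worker: tag the traffic with its origin and pass it on.
  fromClient(id: number, payload: string): void {
    this.postToWorker({ clientId: id, payload });
  }

  // Worker to client: deliver to the addressed client, if still connected.
  fromWorker(envelope: Envelope): boolean {
    const send = this.clients[envelope.clientId];
    if (send === undefined) return false;
    send(envelope.payload);
    return true;
  }
}

// Usage: a stand-in worker that answers every message it receives.
const delivered: string[] = [];
const relay: RelayBridge = new RelayBridge((envelope) => {
  relay.fromWorker({
    clientId: envelope.clientId,
    payload: "answer to " + envelope.payload,
  });
});
const clientId = relay.connect((payload) => { delivered.push(payload); });
relay.fromClient(clientId, "3 + 4");
relay.disconnect(clientId);
const lateDelivery = relay.fromWorker({ clientId, payload: "ignored" });
```

In a real Deno bridge, the two callbacks would wrap the worker's postMessage() and each client's socket send; the routing idea stays the same.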

Usually, the services provided by the worker Caffeine don’t involve graphics, and the system is headless. However, we can also perform all the traditional graphics behavior, using a remote Caffeine instance as the display.

a networked graphics pipeline

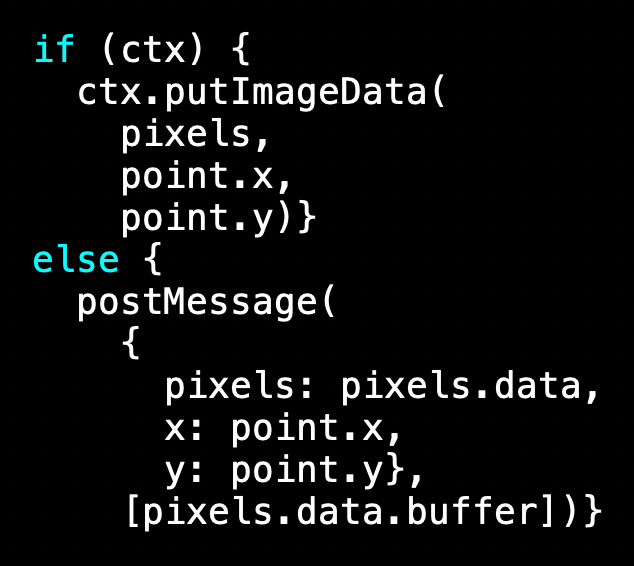

The main Deno thread, the bridge, communicates with the Caffeine worker thread through message handlers invoked on each side with postMessage(). The bridge communicates with a client Caffeine system over a websocket, TCP socket, or WebRTC data channel. The key to remote display is in the worker’s implementation of interpreter.showFormAt():

When the system is headless, the HTML canvas graphics context that would normally be used for image data is null. The interpreter instead uses postMessage() to pass the pixel data to the bridge. The bridge can then broadcast those pixels to interested clients. Normally, the bridge only relays remote messages and their answers amongst the worker and the clients. We can consider a display update as the parameter for an invocation of a virtual block closure callback, handled specially by each receiving Tether. When such an update is received by a system hosted in a web browser, the Tether puts image data to the graphics context of a local HTML canvas, just as the original system would have done normally. The worker’s BitBLT is invoked normally to calculate the pixels, and can use WebAssembly to speed things up.
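The path described above can be sketched roughly as follows. This is a simplified illustration, not the actual implementation of interpreter.showFormAt(): the `DisplayUpdate` shape, the `PixelSink` interface, the `DisplayBridge` class, and the `receiveUpdate` function are all hypothetical names, and the real signature of showFormAt() is not shown in this post.

```typescript
// Hypothetical message shape; not Caffeine's actual wire format.
interface DisplayUpdate {
  type: "displayUpdate";
  x: number;
  y: number;
  width: number;
  height: number;
  pixels: Uint8ClampedArray; // RGBA, four bytes per pixel
}

// Stand-in for the small part of a 2D canvas context used here.
interface PixelSink {
  putPixels(update: DisplayUpdate): void;
}

type SendUpdate = (update: DisplayUpdate) => void;

// Worker side: with a local context, draw as usual; when headless
// (context is null), hand the pixels to the bridge instead.
function showFormAt(
  context: PixelSink | null,
  postToBridge: SendUpdate,
  update: DisplayUpdate,
): void {
  if (context !== null) {
    context.putPixels(update);
  } else {
    postToBridge(update);
  }
}

// Bridge side: pass each display update to every interested client.
class DisplayBridge {
  private clients: SendUpdate[] = [];

  addClient(send: SendUpdate): void {
    this.clients.push(send);
  }

  broadcast(update: DisplayUpdate): void {
    this.clients.forEach((send) => send(update));
  }
}

// Client side: the receiving Tether handles a display update specially
// and puts the pixels to its local canvas.
function receiveUpdate(canvas: PixelSink, update: DisplayUpdate): void {
  canvas.putPixels(update);
}

// Usage: a headless worker, one bridge, and one browser-hosted client.
const drawn: DisplayUpdate[] = [];
const clientCanvas: PixelSink = { putPixels: (u) => { drawn.push(u); } };
const displayBridge = new DisplayBridge();
displayBridge.addClient((u) => receiveUpdate(clientCanvas, u));
showFormAt(null, (u) => displayBridge.broadcast(u), {
  type: "displayUpdate",
  x: 0,
  y: 0,
  width: 2,
  height: 2,
  pixels: new Uint8ClampedArray(2 * 2 * 4),
});
```

In a browser, `putPixels` would most likely wrap the canvas context's `putImageData()`, with the pixels wrapped in an `ImageData` of the given width and height.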

on-demand display enables livecoded server development

When the worker is truly headless, with no graphics-interested clients connected to the bridge, we don’t have to pay the cost of BitBLT/WASM graphics calculations. We can tell from the worker’s Smalltalk object memory that the system is headless, and avoid even asking the interpreter to calculate pixels. But a display can be provided on demand, enabling the livecoding of server apps. This makes the development process much more efficient, by eliminating the loops of implementation and testing that an always-headless server would cause.
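The on-demand idea can be sketched as a simple gate around the pixel calculation. This is only an illustration under assumptions: in Caffeine the headless state is read from the Smalltalk object memory, whereas here a hypothetical `DisplayDemand` counter stands in for it, and `updateDisplay` is an invented name.

```typescript
// Hypothetical bookkeeping for graphics-interested clients. A counter
// stands in for the state the post reads from the Smalltalk object memory.
class DisplayDemand {
  private interested = 0;

  attach(): void {
    this.interested += 1;
  }

  detach(): void {
    if (this.interested > 0) this.interested -= 1;
  }

  isHeadless(): boolean {
    return this.interested === 0;
  }
}

// Run the expensive pixel calculation only when somebody is watching.
// Returns true if an update was computed and posted.
function updateDisplay(
  demand: DisplayDemand,
  computePixels: () => Uint8ClampedArray,
  post: (pixels: Uint8ClampedArray) => void,
): boolean {
  if (demand.isHeadless()) return false; // skip the BitBLT work entirely
  post(computePixels());
  return true;
}

// Usage: count how often the costly calculation actually runs.
let computations = 0;
const compute = (): Uint8ClampedArray => {
  computations += 1;
  return new Uint8ClampedArray(4);
};
const sent: Uint8ClampedArray[] = [];
const demand = new DisplayDemand();

const skipped = updateDisplay(demand, compute, (p) => { sent.push(p); });
demand.attach(); // a client asks for a display
const shown = updateDisplay(demand, compute, (p) => { sent.push(p); });
demand.detach(); // the client leaves; the system is headless again
const skippedAgain = updateDisplay(demand, compute, (p) => { sent.push(p); });
```

The point of the gate is that the cost is paid per interested client session, not per frame of an unwatched server.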

With this ability, I’m writing a dynamic MCP server framework that accepts websocket connections from AI models, and mediates model communication with the subject app. Being able to livecode this will provide further efficiency benefits, by never having to interrupt the model’s interaction with either the MCP server or the subject app.

What would you use it for?
